Learning Objectives

- Outline the general scientific method

- Identify the critical elements of strong inference from Platt’s 1964 paper.

- Contrast strong inference with common misconceptions about how the scientific method works

- Identify and describe the roles of basic elements of experimental design: dependent and independent variables, positive and negative controls, and controlling for extraneous variables.

- Differentiate among the terms scientific theories, hypotheses, laws, and facts

The scientific method

At the core of biology and other sciences lies a problem-solving approach called the scientific method. The scientific method is iterative and includes these steps:

- Make an observation.

- Ask a question.

- Form a hypothesis, or testable explanation.

- Make a prediction based on the hypothesis.

- Test the prediction.

- Iterate: use the results to make new hypotheses or predictions.

The scientific method is used in all sciences, ranging across biology, chemistry, physics, geology, and psychology. The scientists in these fields ask different questions and perform different tests. However, they use the same core approach to find answers that are logical and supported by evidence.

Scientific method example: Failure to toast

Let’s build some intuition for the scientific method by applying its steps to a practical problem from everyday life.

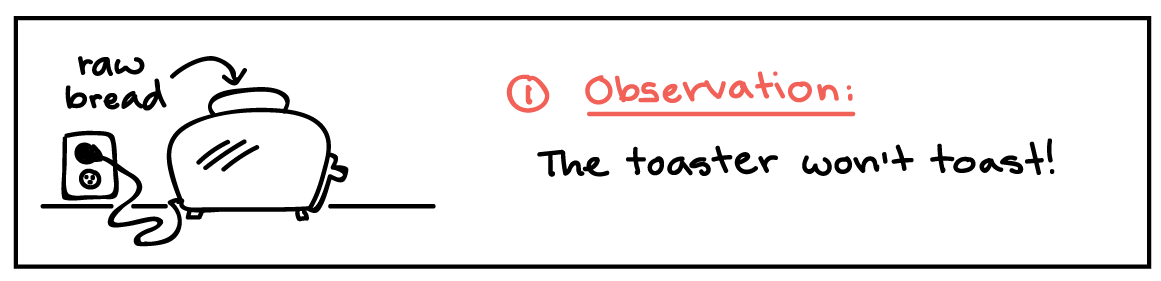

1. Make an observation.

Let’s suppose that you get two slices of bread, put them into the toaster, and press the button. However, your bread does not toast.

2. Ask a question.

Why didn’t my bread get toasted?

3. Propose a hypothesis.

A hypothesis is a potential answer to the question, one that can somehow be tested and falsified. For example, our hypothesis in this case could be that the bread didn’t toast because the electrical outlet is broken.

This hypothesis is not necessarily the right explanation. Instead, it’s a possible explanation that we can test to see if it is likely correct, or if we need to make a new hypothesis.

4. Make predictions.

A prediction is an outcome we’d expect to see if the hypothesis is correct. In this case, we might predict that if the electrical outlet is broken, then plugging the toaster into a different outlet should fix the problem. Predictions are frequently in the form of an If-then statement.

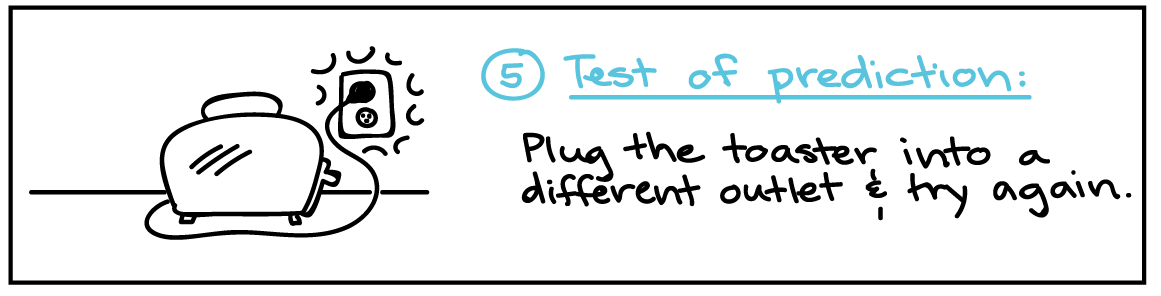

5. Test the predictions.

To test the hypothesis, we need to make an observation or perform an experiment associated with the prediction. For instance, in this case, we would plug the toaster into a different outlet and see if it toasts.

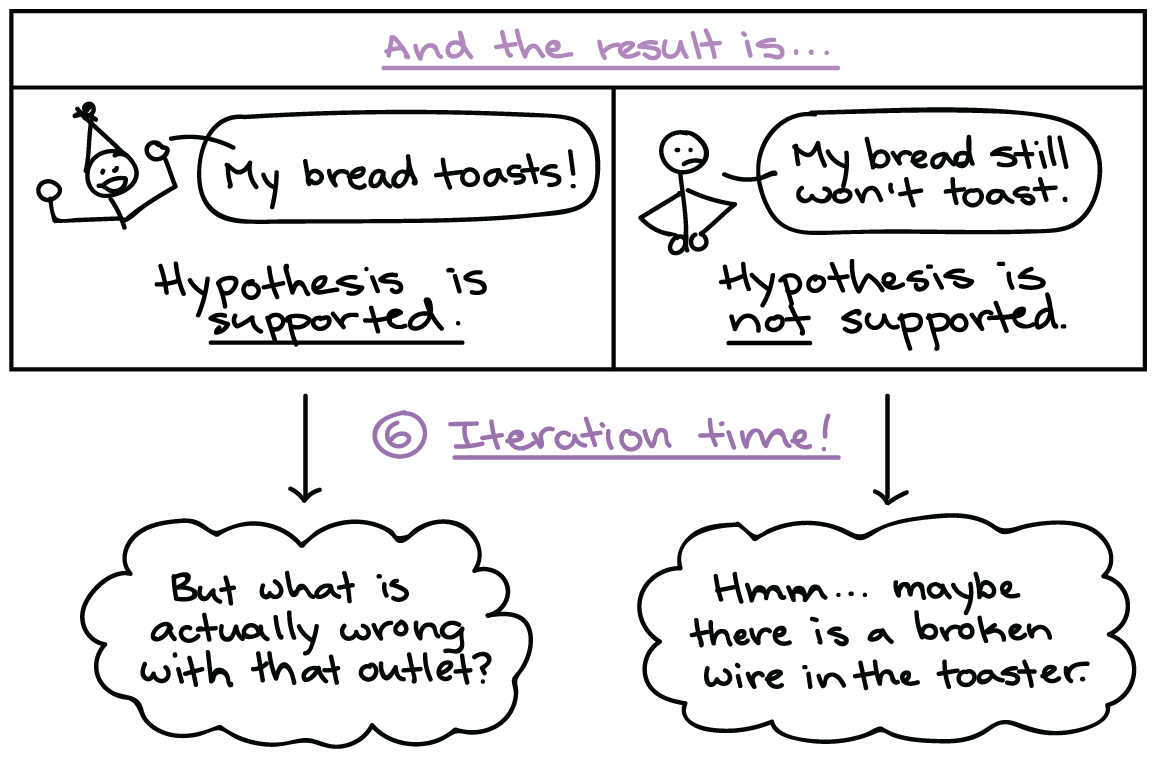

- If the toaster does toast, then the hypothesis is supported, which means it is likely correct.

- If the toaster doesn’t toast, then the hypothesis is not supported, which means it is likely wrong.

The results of a test may either support or refute—oppose or contradict—a hypothesis. Results that support a hypothesis can’t conclusively prove that it’s correct, but they do mean it’s likely to be correct. On the other hand, if results contradict a hypothesis, that hypothesis is probably not correct. Unless there was a flaw in the test—a possibility we should always consider—a contradictory result means that we can discard the hypothesis and look for a new one.

6. Iterate.

The last step of the scientific method is to reflect on our results and use them to guide our next steps.

- If the hypothesis was supported, we might do additional tests to confirm it, or revise it to be more specific. For instance, we might investigate why the outlet is broken.

- If the hypothesis was not supported, we would come up with a new hypothesis. For instance, the next hypothesis might be that there’s a broken wire in the toaster.

In most cases, the scientific method is an iterative process. In other words, it’s a cycle rather than a straight line. The result of one go-round becomes feedback that improves the next round of question asking.
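The cycle described above can be sketched in a few lines of Python. This is only an illustration, not a formal model: the `world` dictionary, the `toasts` rule, and the two tests are hypothetical stand-ins for the toaster example, and the second hypothesis is tested by trying a different toaster, which is an assumption added for this sketch.

```python
def toasts(outlet_works, wire_intact):
    """Assumed rule: bread toasts only with a working outlet and intact wiring."""
    return outlet_works and wire_intact


def investigate(world):
    """Test hypotheses one at a time until one is supported.

    `world` is the true state of affairs, which the investigator cannot
    see directly; they only see whether each test produces toast.
    """
    hypotheses = [
        # (hypothesis, test that should produce toast if the hypothesis is correct)
        ("the outlet is broken",
         lambda: toasts(True, world["toaster_wire_intact"])),   # use a known-good outlet
        ("a wire in the toaster is broken",
         lambda: toasts(world["outlet_works"], True)),          # use a known-good toaster
    ]
    log = []
    for name, run_test in hypotheses:
        supported = run_test()  # prediction: "if the hypothesis is correct, this fix toasts"
        log.append((name, "supported" if supported else "not supported"))
        if supported:
            break  # supported, not proven; further tests could refine it
    return log


# Hypothetical scenario: the outlet is fine, but the toaster has a broken wire.
for step in investigate({"outlet_works": True, "toaster_wire_intact": False}):
    print(step)
```

In this scenario the first hypothesis is not supported, so the loop moves on to a new hypothesis, mirroring the feedback from one round into the next.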

Scientific Method and Strong Inference

Why are some scientists more successful than others? Is it just luck, or that some problems are just more difficult than others, or that some scientists are smarter or know more or work harder? Platt, who coined the term “strong inference,” thinks scientific success is the result of a method of systematic scientific thinking (Platt, 1964). [Note: Platt’s Strong Inference paper is required reading for students enrolled in Biological Principles! (You may need to be logged in through the institute VPN to access the paper link.)] Platt states the following methodology:

In its separate elements, strong inference is just the simple and old-fashioned method of inductive inference that goes back to Francis Bacon. The steps are familiar to every college student and are practiced, off and on, by every scientist. The difference comes in their systematic application. Strong inference consists of applying the following steps to every problem in science, formally and explicitly and regularly:

1) Devising alternative hypotheses;

2) Devising a crucial experiment (or several of them), with alternative possible outcomes, each of which will, as nearly as possible, exclude one or more of the hypotheses;

3) Carrying out the experiment so as to get a clean result;

1′) Recycling the procedure, making subhypotheses or sequential hypotheses to refine the possibilities that remain; and so on.

—Platt, 1964

Platt then highlights two critical points in this method:

- use multiple working hypotheses to avoid favoring a hypothesis and confirmation bias;

- design experiments to eliminate or “falsify” one or more alternative hypotheses.
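One way to picture these two points is as a process of elimination over several hypotheses held at once. The sketch below reuses the toaster scenario from earlier in this chapter; the third hypothesis, the table of predictions, and the observed outcomes are all hypothetical values chosen for illustration, and the function names are not from Platt's paper.

```python
# Hypothetical table: the outcome each hypothesis predicts for each experiment.
PREDICTIONS = {
    "outlet is broken": {
        "use another outlet": "toasts",
        "use another toaster": "no toast",
    },
    "toaster wire is broken": {
        "use another outlet": "no toast",
        "use another toaster": "toasts",
    },
    "power is out in the whole house": {
        "use another outlet": "no toast",
        "use another toaster": "no toast",
    },
}


def is_crucial(experiment, hypotheses):
    """An experiment can exclude something only if the hypotheses disagree about it."""
    outcomes = {PREDICTIONS[h][experiment] for h in hypotheses}
    return len(outcomes) > 1


def exclude(hypotheses, experiment, observed):
    """Keep only the hypotheses whose prediction matches the observed outcome."""
    return [h for h in hypotheses if PREDICTIONS[h][experiment] == observed]


remaining = list(PREDICTIONS)                                     # 1) alternative hypotheses
remaining = exclude(remaining, "use another outlet", "no toast")  # 2-3) experiment, clean result
remaining = exclude(remaining, "use another toaster", "toasts")   # 1') recycle the procedure
print(remaining)
```

Note that the surviving hypothesis has not been proven; it is simply the only one in this small set that has not yet been excluded.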

Key to the scientific approach of testing alternative hypotheses is that science doesn’t prove things are true. Instead, it can only disprove a hypothesis or idea.

Experimental Design

Well-designed experiments test hypotheses by attempting to falsify, meaning to disprove or eliminate, as many hypotheses as possible. Typical experiments have one or more independent variables, some factor in the experiment that is set in varying amounts by the experimenter. Examples of independent variables include time or the amounts of a particular substance added to reactions or to cell cultures. Typically, the independent variables are plotted on the x-axis of a graph. The graph’s y-axis then shows the dependent variables, which are the outcomes that depend on the independent variables.

The valid interpretation of experiments requires proper controls. Positive controls are experiments with known outcomes. Their purpose is to make sure that the instruments and reagents are all working properly. For instance, to conduct a pH test, the experimenter can use bleach as a positive control for a base or lemon juice for an acid. If an experiment with an unknown produces negative results, but the positive control produces the expected results, then we can be confident that the negative results were not due to faulty instruments or reagents.

Conversely, negative controls are experiments that should produce no result, which in science is called a negative or null result. They also guard against faulty instruments or contaminated reagents. An experiment with an unknown that produces a positive result is valid only if the negative control shows the expected negative result.
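The logic of how controls affect interpretation can be written as a small decision rule. This is a minimal sketch under simplifying assumptions: each result is reduced to a yes/no signal, and the function name and return strings are made up for illustration. It also checks both controls before accepting any result, which is slightly stricter than the paragraphs above require.

```python
def interpret(sample_positive, positive_control_positive, negative_control_positive):
    """Interpret an unknown sample in light of its controls.

    Each argument is True if that reaction showed a signal, False otherwise.
    """
    if not positive_control_positive:
        # The assay failed to detect something known to be there.
        return "invalid: positive control failed (faulty instrument or reagent?)"
    if negative_control_positive:
        # The assay showed a signal where none should exist.
        return "invalid: negative control gave a signal (contamination?)"
    return "valid positive result" if sample_positive else "valid negative result"


print(interpret(sample_positive=False,
                positive_control_positive=True,
                negative_control_positive=False))
print(interpret(sample_positive=True,
                positive_control_positive=True,
                negative_control_positive=True))
```

The first call corresponds to a trustworthy negative result; the second shows a positive result that cannot be trusted because the negative control also produced a signal.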

Questions for thought and discussion

Given that science is a way of knowing about the world around us, what are the applications of strong inference outside the science laboratory?

What are the similarities and differences between strong inference and clinical diagnostics or criminal investigation?

What are the limitations of strong inference and the scientific method?

Is it possible for the scientific method to definitively prove a hypothesis?

Theories, hypotheses, laws, and facts in science

These are words in everyday use, but they hold special meaning in science. A fact is an observation that is true. Facts have been repeatedly tested and confirmed, and they are universally supported. A fact just is; it doesn’t provide justification or explanation for how or why it came to be. Here are some facts in science: colds and flu are caused by viruses, objects fall to the ground, and populations evolve.

A hypothesis is a proposed explanation for a phenomenon. It is sometimes referred to as an educated guess because the proposed explanation is based on observations. Hypotheses must be testable, which means they can be falsified or proven wrong. Oddly, they cannot be proven right!

A scientific law is a statement of relationship that expresses a fundamental principle of science. Laws always apply under a given set of conditions but may be limited to very specific conditions. Laws imply a causal relationship and are often based on mathematical relationships, but laws do not provide a mechanism or explanation for a phenomenon. Examples of scientific laws include the law of gravity, Newton’s laws of motion, and the laws of thermodynamics.

Finally, a scientific theory is a unifying idea that explains scientific facts, laws, and observations. Theories are well supported by multiple independent lines of evidence (well-supported hypotheses). They are well accepted but constantly refined as more evidence is gathered and analyzed. Importantly, a theory provides mechanisms to explain how and why a phenomenon occurs. Examples of scientific theories include the Big Bang Theory, Cell Theory, and Theory of Evolution by Natural Selection.

Credible Sources and how to find them

These days, when we have a question, we turn to the internet. Internet search engines like Google can link you to almost any content, and they even filter content based on your past searches, location, and preference settings. However, search engines do not vet content. Determination of whether content is credible is up to the end-user. In an era where misinformation can be mistaken for fact, how do we know what information to trust?

The process of scientific peer review is one assurance that scientists place on the reporting of scientific results in scientific journals like Science, Nature, the Proceedings of the National Academy of Sciences (PNAS), and many hundreds of other journals. In peer review, research is read by anonymous reviewers who are experts in the subject. The reviewers provide feedback and commentary and ultimately give the journal editor a recommendation to accept, accept with revisions, or decline for this journal. While this process is not flawless, it has a fairly high success rate in catching major issues and problems and improving the quality of the evidence.

In the rare situation when a study has passed through the sieve of peer review and is later found to be deeply flawed, the journal or the authors can choose to take the unusual step of retracting the work. Retraction is infrequent but does happen in science, and it is reassuring to know that there are ways to flag problematic work that has slipped through the peer review process. A prominent example of a retracted study in biology was one that erroneously linked the MMR vaccine to autism (https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2831678/). Some sectors of the public still have misconceptions about supposed linkages between autism and vaccination. The putative autism-vaccination connection is a classic case of how correlation does not imply causation: the fact that two events co-occur (here, the recommended childhood vaccination schedule and the onset of autism symptoms) does not mean that one caused the other.

With the rapid spread of SARS-CoV-2 and the Covid-19 pandemic in 2020 and beyond, the demand for scientific information about the novel coronavirus outpaced the rate at which journals could peer-review and publish scientific research. Many research articles on SARS-CoV-2 were therefore released as preprints, meaning they had been submitted to a journal for peer review and eventual publication, but the authors wanted to release the information for immediate use. Scientists remain skeptical of preprints until the peer-review process has scrutinized the presentation of data and ideas and required revision by the authors.

Outside of the scientific literature, the public has other ways to access information about current topics. In the media, journalists use published and preprint articles, press releases, interviews, public records requests, and other sources to find source information. They cite their sources when possible and are responsible to their editors for the quality and authenticity of their reporting. Some media sources have better track records than others for unbiased reporting.

The lowest bar for scrutiny and review is on the internet. Websites and social media are places where anyone can post anything and make claims that may or may not be supported by evidence. As the end-users, our job is to find sources supported by evidence, cited ethically, and otherwise credibly presented. We have the responsibility to notice whether an organization is funded or motivated in ways that might generate bias in its content. We have the obligation to cross-reference ideas from unvetted sources to help us establish how credible the source of information is. Science is based on evidence, and we will work this semester to identify and interpret scientific evidence.

UN Sustainable Development Goal

SDG 4: Quality Education – Distinguishing between strong and weak arguments or inferences is important for promoting critical thinking skills and developing evidence-based decision-making. These skills are critical for developing sustainably and engaging in global citizenship.

References:

Platt, John R. “Strong Inference.” Science, New Series, Vol. 146, No. 3642 (Oct. 16, 1964), pp. 347-353.

Raup, David C. and Thomas C. Chamberlin. “The Method of Multiple Working Hypotheses.” The Journal of Geology, Vol. 103, No. 3 (May, 1995), pp. 349-354 (originally published in 1897).

I highly recommend reading Science Isn’t Broken – It’s Just a Hell of a Lot Harder than We Give It Credit for by Christie Aschwanden. This is a FiveThirtyEight feature on reproducibility of scientific studies, scientific misconduct, statistics, human fallibility, and the nature of scientific investigations. It includes an interactive analyze-it-yourself demo that lets readers investigate whether the economy does better with Democrats or Republicans in power.

http://fivethirtyeight.com/features/science-isnt-broken/